Softmax

The Softmax function is one of the most widely used building blocks in machine learning, particularly in neural networks. It appears in image classification, natural language processing, and reinforcement learning. But what exactly is Softmax, and why is it so essential? Let's look at how it works.

The Basics of Softmax Function

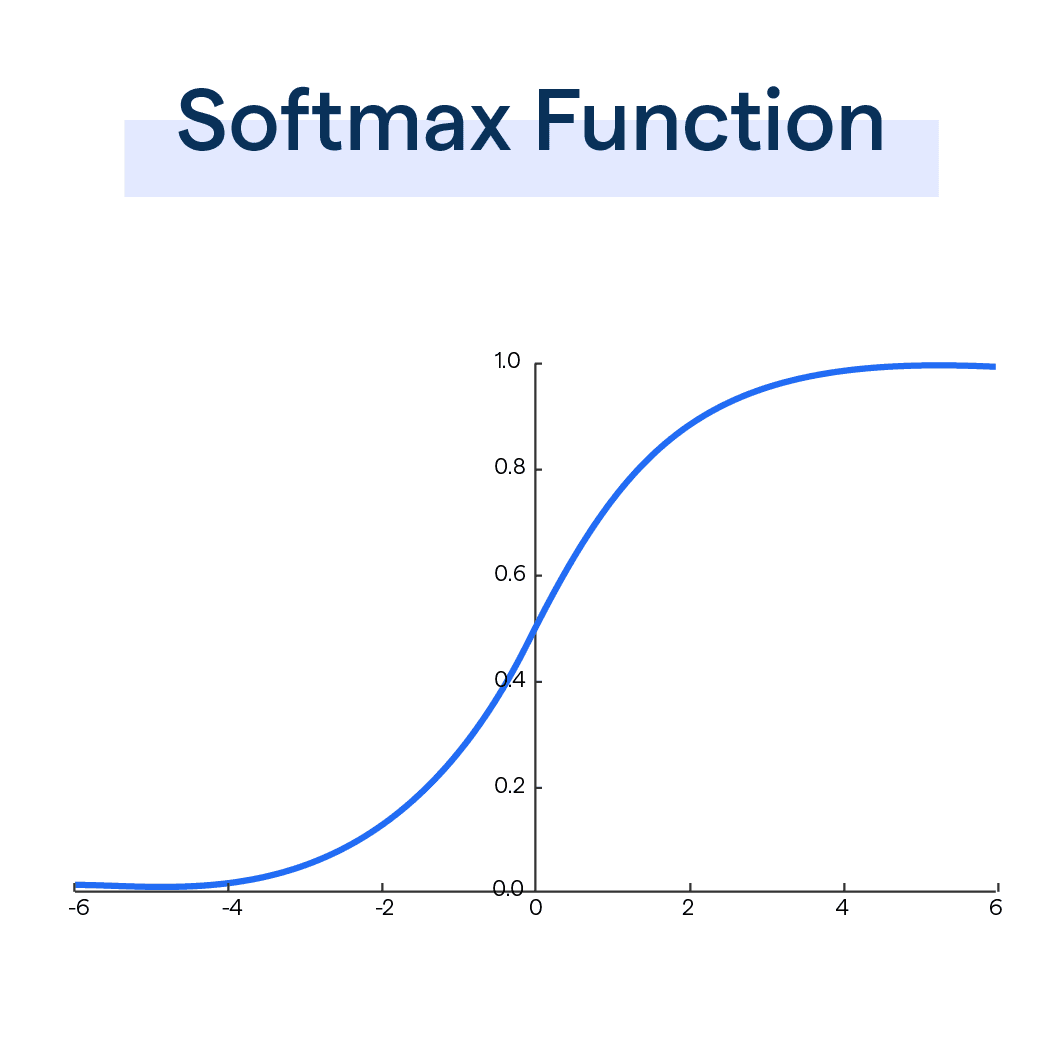

At its core, Softmax is a mathematical function that takes a vector of arbitrary real-valued scores and transforms it into a probability distribution. It exponentiates each score and divides by the sum of all the exponentials, so every output is strictly positive and the outputs sum to one. That makes it ideal for tasks where we need to assign a probability to each of several classes.
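As a minimal sketch of that definition, the snippet below writes Softmax directly in NumPy. The function name `softmax` and the example scores are illustrative choices, not something the article prescribes, and this direct form is only safe for moderately sized inputs (see the discussion of numerical instability under Challenges and Considerations).

```python
import numpy as np

def softmax(scores):
    """Map a 1-D sequence of real-valued scores to a probability distribution."""
    scores = np.asarray(scores, dtype=float)
    exps = np.exp(scores)       # every exponential is strictly positive
    return exps / exps.sum()    # dividing by the total makes the outputs sum to one

# Hypothetical scores for three classes.
probs = softmax([2.0, 1.0, 0.1])
print(probs)        # roughly [0.659 0.242 0.099]
print(probs.sum())  # one, up to floating-point rounding
```

The largest score receives the largest probability, but the other classes still get non-zero mass.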

Key Properties of Softmax

- Normalization: Softmax ensures that the output probabilities sum up to one, thereby representing a valid probability distribution. This property is crucial for tasks like classification, where each class must have a probability associated with it.

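- Sensitivity to Input Magnitudes: The Softmax function is sensitive to the scale of its inputs. Because each score is exponentiated, a gap between two scores turns into a ratio between their probabilities, so multiplying all scores by a larger factor pushes the output toward a single dominant class. Adding the same constant to every score, by contrast, leaves the output unchanged. This sharpening can be both advantageous and disadvantageous, depending on the application.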

- Differentiability: Softmax is differentiable, making it compatible with gradient-based optimization algorithms like stochastic gradient descent (SGD). This property is fundamental for training neural networks using techniques like backpropagation.
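The properties above can be checked numerically. The sketch below assumes a NumPy implementation of Softmax and uses the standard closed-form Jacobian, diag(p) - p pᵀ, which it compares against a finite-difference estimate. The helper names and the example vector are hypothetical.

```python
import numpy as np

def softmax(z):
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())   # subtracting the max does not change the result
    return e / e.sum()

def softmax_jacobian(z):
    """Closed-form Jacobian: J[i, j] = p[i] * (delta_ij - p[j])."""
    p = softmax(z)
    return np.diag(p) - np.outer(p, p)

z = np.array([1.0, 2.0, 3.0])
p = softmax(z)

# Normalization: the outputs form a valid distribution.
total = p.sum()

# Sensitivity to magnitudes: scaling the scores sharpens the distribution,
# while adding a constant to every score changes nothing.
scaled = softmax(10.0 * z)
shifted = softmax(z + 100.0)

# Differentiability: central finite differences agree with the closed form.
eps = 1e-6
numeric = np.zeros((3, 3))
for j in range(3):
    step = np.zeros(3)
    step[j] = eps
    numeric[:, j] = (softmax(z + step) - softmax(z - step)) / (2 * eps)

print(p, scaled, shifted)
print(np.allclose(numeric, softmax_jacobian(z), atol=1e-6))
```

In a real training setup an automatic differentiation framework would compute this gradient, so the explicit Jacobian here is only for illustration.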

Applications of Softmax

- Classification: Softmax is widely used in multi-class classification tasks, where the model needs to assign probabilities to multiple classes. For instance, in image classification, Softmax can predict the probability of an image belonging to each class in a predefined set of categories.

- Policy Networks in Reinforcement Learning: In reinforcement learning, Softmax is often used to parameterize the policy function, which determines the agent’s actions based on the current state. By applying Softmax to the action preferences, the agent can select actions probabilistically, balancing between exploration and exploitation.

- Natural Language Processing (NLP): Softmax is employed in various NLP tasks, such as language modeling and sentiment analysis. In language modeling, Softmax converts the model's scores over the vocabulary into a probability for each candidate next word.
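To make the reinforcement learning case concrete, here is a small sketch of sampling actions from a Softmax policy. The action preferences are made up, and the `temperature` parameter is an assumption added for illustration (a common way to trade exploration against exploitation), not something the article specifies.

```python
import numpy as np

def softmax(z):
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())
    return e / e.sum()

def sample_action(preferences, rng, temperature=1.0):
    """Draw one action index with probability given by Softmax of the preferences.

    A higher temperature flattens the distribution (more exploration);
    a lower one concentrates it on the preferred action (more exploitation).
    """
    probs = softmax(np.asarray(preferences, dtype=float) / temperature)
    return int(rng.choice(len(probs), p=probs))

rng = np.random.default_rng(0)
preferences = [1.0, 2.0, 0.5]   # hypothetical preferences for three actions

actions = [sample_action(preferences, rng) for _ in range(5000)]
freqs = np.bincount(actions, minlength=3) / len(actions)

print(softmax(preferences))  # target probabilities
print(freqs)                 # empirical frequencies, close to the target
```

The same pattern applies to language modeling, where the "actions" are vocabulary entries and the preferences are the model's scores for the next word.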

Challenges and Considerations

While Softmax offers numerous advantages, it is not without its challenges. One common issue is numerical instability: exponentiating large scores can overflow, and exponentiating very negative scores can underflow to zero. The usual remedy is to subtract the maximum score from every input before exponentiating, which leaves the result unchanged. When log-probabilities are needed, the closely related log-sum-exp trick is employed.
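The sketch below contrasts a direct implementation with a max-shifted one on deliberately large scores. The function names and inputs are illustrative assumptions.

```python
import numpy as np

def naive_softmax(z):
    e = np.exp(z)
    return e / e.sum()

def stable_softmax(z):
    shifted = z - np.max(z)     # largest entry becomes 0, so exp() cannot overflow
    e = np.exp(shifted)
    return e / e.sum()

def log_softmax(z):
    """Log-probabilities via the log-sum-exp trick."""
    shifted = z - np.max(z)
    return shifted - np.log(np.sum(np.exp(shifted)))

z = np.array([1000.0, 1001.0, 1002.0])

# exp(1000) overflows to inf in float64, and inf / inf yields nan.
with np.errstate(over="ignore", invalid="ignore"):
    naive = naive_softmax(z)

stable = stable_softmax(z)

print(naive)                    # [nan nan nan]
print(stable)                   # same result as for the scores [0, 1, 2]
print(np.exp(log_softmax(z)))   # matches the stable result
```

Library implementations generally apply a shift of this kind internally, so in practice it is usually safer to call the library routine than to hand-roll the exponentials.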

Moreover, because Softmax amplifies the differences between input scores, a model trained on imbalanced data can end up assigning very confident probabilities to the majority classes. Techniques like class weighting in the loss function or regularization can help alleviate this issue.
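One way class weighting might look in practice is a weighted cross-entropy on top of Softmax, sketched below. The logits, labels, and the inverse-frequency weighting scheme are all hypothetical choices for illustration. Other weighting schemes exist and the article does not prescribe one.

```python
import numpy as np

def log_softmax(logits):
    """Row-wise log-probabilities for a 2-D array of logits."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

def weighted_cross_entropy(logits, labels, class_weights):
    """Cross-entropy where each example is weighted by its true class's weight."""
    log_probs = log_softmax(logits)
    picked = log_probs[np.arange(len(labels)), labels]  # log-prob of the true class
    weights = class_weights[labels]
    return -(weights * picked).sum() / weights.sum()

# Made-up batch: three examples of class 0, one of the rarer class 1.
logits = np.array([[2.0, 0.5], [1.5, 0.2], [1.0, 1.2], [0.3, 0.1]])
labels = np.array([0, 0, 0, 1])

# Inverse-frequency weights (an assumption): rarer classes count for more.
counts = np.bincount(labels, minlength=2)
class_weights = counts.sum() / (len(counts) * counts)

uniform = weighted_cross_entropy(logits, labels, np.ones(2))
weighted = weighted_cross_entropy(logits, labels, class_weights)

print(class_weights)
print(uniform, weighted)
```

With these numbers the misclassified minority example contributes more to the weighted loss than to the unweighted one, which is the effect class weighting is meant to produce.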

Conclusion

The Softmax function is a cornerstone of neural networks and machine learning, enabling models to make probabilistic predictions across multiple classes. Its simple mathematical formulation, coupled with essential properties like normalization and differentiability, makes it indispensable in a wide array of applications.

As research in the field continues to evolve, Softmax remains a fundamental tool for machine learning practitioners, serving as a bridge between raw scores and meaningful probabilities for prediction and decision-making.