Embedding

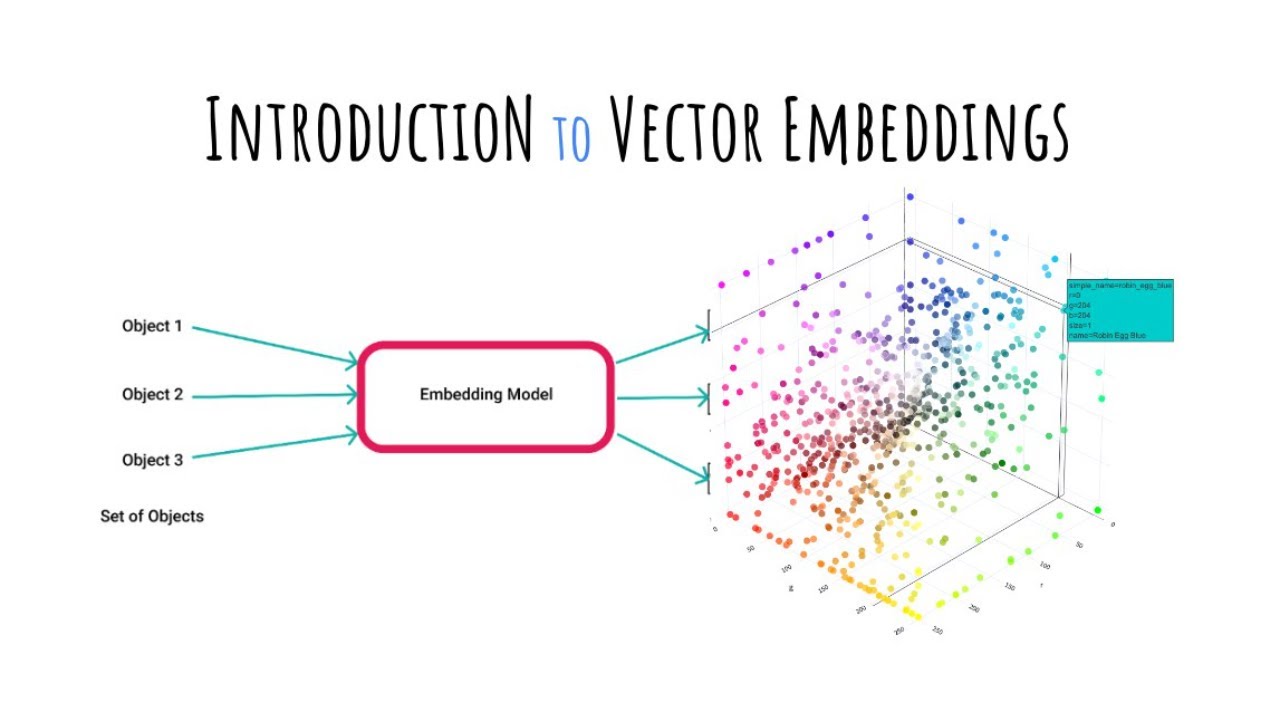

In artificial intelligence, an embedding is a numeric representation of data, typically a vector, that lets a model compare, categorize, and interpret inputs efficiently. At its core, embedding transforms raw, often unstructured data such as text, images, or audio into a structured numeric form that supports more nuanced analysis. From natural language processing to image recognition and beyond, embedding techniques underpin a wide range of AI applications.

Understanding Embedding:

At its essence, embedding involves converting high-dimensional, often sparse data (for example, one-hot vectors over a vocabulary) into lower-dimensional dense representations while preserving essential features and relationships. This process is particularly prominent in natural language processing (NLP), where words are transformed into dense vectors, often referred to as word embeddings. These vectors encode semantic and syntactic similarities between words: words that appear in similar contexts within a corpus end up close to one another in the vector space.
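To make "close to one another" concrete, the sketch below compares vectors with cosine similarity, a common way to measure closeness between embeddings. The four-dimensional vectors are hand-picked for illustration and are an assumption of this example, not the output of any trained model.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical 4-dimensional embeddings, hand-picked for illustration only.
embeddings = {
    "king":  np.array([0.8, 0.6, 0.1, 0.0]),
    "queen": np.array([0.7, 0.7, 0.1, 0.1]),
    "apple": np.array([0.0, 0.1, 0.9, 0.4]),
}

sim_royal = cosine_similarity(embeddings["king"], embeddings["queen"])
sim_fruit = cosine_similarity(embeddings["king"], embeddings["apple"])

# Related words should score higher than unrelated ones.
print(f"king vs queen: {sim_royal:.3f}")
print(f"king vs apple: {sim_fruit:.3f}")
```

Real embeddings usually have hundreds of dimensions, but the comparison works the same way.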

Word Embedding Techniques:

Several methodologies exist for generating word embeddings, each with its unique strengths and applications. Among the most renowned approaches are:

- Word2Vec: Introduced by researchers at Google, Word2Vec uses shallow neural networks to learn word embeddings from large text corpora. It comes in two variants: continuous bag-of-words (CBOW), which predicts a word from its surrounding context, and skip-gram, which predicts the surrounding context from a word. Both capture semantic relationships between words, supporting tasks such as word similarity calculation and context prediction.

- GloVe (Global Vectors for Word Representation): Developed by Stanford University researchers, GloVe combines global word co-occurrence statistics with matrix factorization techniques to generate word embeddings. Whereas Word2Vec learns from one local context window at a time, GloVe is trained on co-occurrence counts aggregated over the entire corpus, thereby incorporating global context directly into its embeddings.

- BERT (Bidirectional Encoder Representations from Transformers): BERT uses the transformer architecture to generate contextualized word embeddings. Unlike Word2Vec and GloVe, which assign each word a single fixed vector, BERT produces a vector for each token that depends on the surrounding sentence. By considering both left and right context during training, BERT captures fine semantic distinctions and syntactic structure, making it highly effective for tasks such as sentiment analysis, named entity recognition, and question answering.
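As a rough illustration of the data the first two techniques start from, the sketch below builds skip-gram (center, context) pairs from a toy sentence and then aggregates the same pairs into corpus-wide co-occurrence counts. The function names and the toy sentence are assumptions for this example. The counts are unweighted, which is a simplification, and no embeddings are trained here.

```python
from collections import Counter

def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs: the examples a skip-gram model learns to predict."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

def cooccurrence_counts(tokens, window=2):
    """Aggregate the pairs into global counts, the kind of statistic a GloVe-style model uses."""
    return Counter(skipgram_pairs(tokens, window))

tokens = "the cat sat on the mat".split()

pairs = skipgram_pairs(tokens, window=1)
counts = cooccurrence_counts(tokens, window=2)

print(pairs[:4])
print(counts.most_common(3))
```

The same windowed pairs feed both views: a Word2Vec-style model consumes them one at a time, while a GloVe-style model first sums them over the whole corpus.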

Beyond Words: Embedding in Multimodal Data:

While word embedding remains a cornerstone of NLP, embedding techniques also apply to other data types, including images, audio, and video. Multimodal embedding techniques aim to map information from different modalities into a shared embedding space, so that, for example, an image and a sentence describing it can be compared directly.

- Image Embedding: Convolutional neural networks (CNNs) are commonly employed for image embedding, wherein images are transformed into feature vectors capturing their visual characteristics. These embeddings facilitate tasks such as image classification, object detection, and image retrieval, empowering AI systems with visual understanding capabilities.

- Audio Embedding: Similar to image embedding, audio embedding involves extracting meaningful representations from audio signals. Techniques such as spectrogram analysis and deep neural networks enable the generation of audio embeddings conducive to tasks such as speech recognition, speaker identification, and sound event detection.
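The spectrogram route mentioned above can be sketched with NumPy alone. The example below computes a magnitude spectrogram of a synthetic tone and averages it over time into one fixed-length vector. This is a crude hand-crafted stand-in, not a learned embedding: in practice a deep network would usually consume the spectrogram. The function names, frame sizes, and test tone are assumptions for illustration.

```python
import numpy as np

def spectrogram(signal, frame_len=256, hop=128):
    """Magnitude spectrogram: one row per frame, one column per frequency bin."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    return np.abs(np.fft.rfft(frames, axis=1))

def crude_audio_embedding(signal):
    """Average the log-magnitude spectrogram over time to get one fixed-length vector."""
    spec = spectrogram(signal)
    return np.log1p(spec).mean(axis=0)

sample_rate = 8000
t = np.arange(sample_rate) / sample_rate   # one second of audio
tone = np.sin(2 * np.pi * 440.0 * t)       # synthetic 440 Hz tone

embedding = crude_audio_embedding(tone)
print(embedding.shape)                     # one value per frequency bin
print(int(np.argmax(embedding)))           # index of the strongest frequency bin
```

Because every clip maps to a vector of the same length regardless of duration, such vectors can be compared with the same similarity measures used for word embeddings.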

Applications Across Domains:

Because embeddings turn very different kinds of data into comparable vectors, they are used across many domains. Some notable applications include:

- Recommendation Systems: Embedding-based recommendation systems leverage user and item embeddings to personalize recommendations, enhancing user engagement and satisfaction across e-commerce platforms, streaming services, and social media.

- Healthcare: In healthcare, patient embeddings derived from electronic health records can support clinical decision support and disease prediction, while image embeddings are used in medical image analysis. Both aim to give clinicians actionable insights and improve patient outcomes.

- Finance: In the financial sector, embedding techniques are used in algorithmic trading strategies, fraud detection systems, and credit risk assessment models, helping institutions mitigate risk and make better-informed decisions.
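As one concrete instance of the applications above, an embedding-based recommender can score every item for a user with a dot product between the user vector and each item vector. The sketch below uses randomly initialised vectors as stand-ins for learned embeddings. The sizes, names, and the `recommend` helper are assumptions for illustration, and training the embeddings is out of scope here.

```python
import numpy as np

rng = np.random.default_rng(0)
n_users, n_items, dim = 3, 5, 4

# Random stand-ins for embeddings that would normally be learned from interactions.
user_vecs = rng.normal(size=(n_users, dim))
item_vecs = rng.normal(size=(n_items, dim))

def recommend(user_id, k=2, seen=()):
    """Return the indices of the k highest-scoring items the user has not seen."""
    scores = item_vecs @ user_vecs[user_id]  # one dot product per item
    for item_id in seen:
        scores[item_id] = -np.inf            # never recommend an already-seen item
    return np.argsort(-scores)[:k].tolist()

top = recommend(user_id=0, k=2)
next_best = recommend(user_id=0, k=2, seen=top)
print(top, next_best)
```

The dot product is only one possible scoring function. Cosine similarity or a small neural network over the two vectors are common alternatives.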

Future Perspectives:

As AI continues to evolve, embedding techniques are poised to play an increasingly pivotal role in driving innovation and unlocking new frontiers. Emerging research avenues, such as graph embedding for network analysis and knowledge graph embedding for semantic representation learning, hold promise for addressing complex real-world challenges and advancing AI capabilities.

Conclusion

Embedding serves as a cornerstone of artificial intelligence, enabling machines to transform raw data into actionable insights across diverse modalities and domains. As embedding techniques improve, they bring AI systems closer to a richer, more human-like understanding of language, images, and sound.